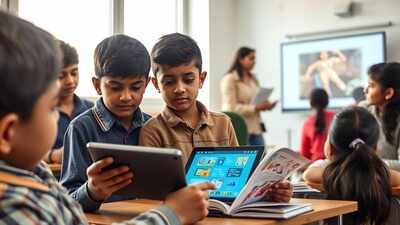

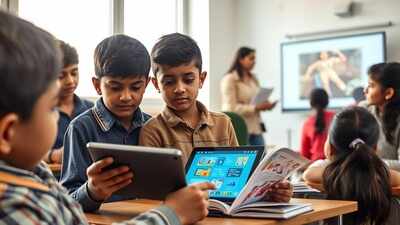

AI in classrooms: Friend or foe? Benefits, boundaries, and why learning is still a human act

The arrival of AI-enabled devices in schools—often called AI PCs—marks a shift from computers as passive portals to machines that can generate explanations, draft feedback and adapt tasks in real time. That possibility excites and alarms in equal measure. Used well, AI can help teachers manage wide learning gaps and crowded classrooms. Used poorly, it can deskill learners, monetise children’s attention and fragment already-stretched school budgets. The right question is not whether AI belongs in classrooms, but under what terms, with what boundaries, and to what end.

What an AI PC actually changes

Traditional classroom technology serves up content; AI PCs try to shape it. On-device models can summarise a chapter at different reading levels, propose practice questions, or flag misconceptions as a student types. For teachers, assistants can draft lesson scaffolds, design quick checks for understanding, and mark low-stakes work. None of this replaces the human craft of pedagogy. It does change the unit of time. Instead of waiting for the next class to intervene, a teacher can guide a student mid-task, with AI surfacing who needs help and why.

Two design choices matter. First, processing on the device reduces dependence on unreliable connectivity and can limit data leaving the classroom. Second, the quality of prompts and task design becomes part of instructional planning. The school that treats prompting as a literacy to be taught, like writing or coding, will harvest far more value than one that treats AI as a magic add-on.

The benefits worth pursuing

Personalisation without isolation. AI can adjust the difficulty, pace and modality of practice while the teacher orchestrates discussion and projects. The gains come when individualised practice feeds back into collective learning: students use the next period to defend their solutions, not just consume another worksheet.

Teacher workload relief. Planning, marking and admin eat hours. If AI handles first drafts and bulk feedback, teachers can spend more time conferencing with students, observing small groups and preparing richer tasks. Time is the scarce resource in schools; AI should buy it back for human interactions.

Earlier detection of learning drift. Continuous, low-stakes signals can reveal when a learner is stuck, bored or guessing. Rather than labelling students, the point is triage: who needs a different explanation, a prerequisite review, or a challenge extension today.

The risks that demand guardrails

Erosion of thinking. If the device writes, plans and explains everything, the learner practises very little. Schools must distinguish assistance from outsourcing. Tasks should require visible reasoning: annotations, rough work, oral defences, and reflections that AI cannot convincingly fake without the student understanding the material.

Data exposure and surveillance creep. Children generate sensitive patterns as they read, type and speak. Even with on-device processing, telemetry, backups and model updates can leak information. Default settings should minimise collection, keep data local where possible, and make retention short. Parents and students deserve plain-language notices explaining what is captured and how to opt out.

Bias and misdirection. Generative models sometimes produce fluent nonsense or embed subtle stereotypes. Classroom use needs friction: citations or evidence prompts by default, cross-checks against approved sources, and teacher-controlled guardrails that limit certain outputs for younger learners.

Equity and procurement. AI PCs are not cheap, and recurring costs (licences, model updates, device management) can rival the hardware itself. Without a funding plan, early adopters widen gaps. Districts should negotiate pooled licences, support shared device labs, and measure impact before expanding purchases.

Assessment integrity. If a device can produce answers indistinguishable from a student’s, home assignments lose credibility. That pushes assessment towards performance tasks, viva-style checks, and monitored creation in class. It also argues for teaching students to use AI transparently: declare assistance, describe the steps taken, and submit artefacts that show process.

A practical framework for schools

1) Start with the problem, not the tool. Identify one or two pain points: reading comprehension gaps in middle school, feedback delays in high school writing, teacher time lost to grading. Pilot AI only where it plausibly shrinks those gaps in weeks, not years.

2) Keep one foot on paper. Hybrid classrooms are resilient. When connectivity fails, learning should continue. Many tasks can begin on paper, move to AI for feedback, and come back to paper for reflection or assessment.

3) Adopt “human-in-the-loop” as policy. Teachers remain accountable for final judgements. AI can propose marks and comments; teachers approve, edit or reject. Students can draft with AI; they must revise with evidence of their own thinking.

4) Build a minimal governance stack.

- Use policy: what AI can and cannot be used for, by age band.

- Data policy: retention periods, parental access, audit logs.

- Content policy: guardrails for harmful or age-inappropriate outputs.

- Incident response: a playbook for hallucinations, bias complaints, or breaches.

5) Invest in teacher capability. Training should be classroom-anchored: model lessons, co-planning, exemplar prompts, and time to experiment. Pair early adopters with sceptics and make the evaluation public and honest.

6) Measure what matters. Track reductions in teacher admin time, turnaround of feedback, student engagement during practice, and movement in specific skills. If gains are not visible within a term, stop, adapt or scale back.
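For schools whose IT teams want to make the governance stack in step 4 concrete, the use and data policies can be written down as a small, checkable configuration. The sketch below is only one possible shape, not a recommended standard: the age bands, the permitted uses, the retention periods, and every name in it (`AgeBandPolicy`, `POLICIES`, `request_use`, `is_expired`) are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Every value below is an illustrative assumption, not a recommendation.


@dataclass(frozen=True)
class AgeBandPolicy:
    min_age: int               # youngest age covered by this band
    max_age: int               # oldest age covered by this band
    allowed_uses: frozenset    # use policy: what AI may be used for
    retention_days: int        # data policy: how long records are kept


POLICIES = (
    AgeBandPolicy(5, 10, frozenset({"reading_practice"}), 30),
    AgeBandPolicy(11, 14, frozenset({"reading_practice", "draft_feedback"}), 60),
    AgeBandPolicy(
        15, 18,
        frozenset({"reading_practice", "draft_feedback", "research_summary"}),
        90,
    ),
)

# Audit log: one entry per request, whether or not it was allowed.
audit_log = []


def policy_for(age):
    """Return the policy whose age band contains the given age."""
    for policy in POLICIES:
        if policy.min_age <= age <= policy.max_age:
            return policy
    raise ValueError(f"no policy covers age {age}")


def request_use(student_id, age, use):
    """Check a requested use against the use policy and log the decision."""
    allowed = use in policy_for(age).allowed_uses
    audit_log.append({"student": student_id, "use": use, "allowed": allowed})
    return allowed


def is_expired(age, created, today):
    """True when a record is older than the retention period for that age band."""
    return today - created > timedelta(days=policy_for(age).retention_days)
```

Under these assumed values, `request_use("s1", 9, "draft_feedback")` is refused and logged, while the same request for a 12-year-old is allowed. The point of writing policy this way is that parents, teachers and auditors can read the rules in one place and verify that the software follows them.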

What ‘good’ looks like

In a well-run AI classroom, the device is most active during practice and revision. Whole-class moments—explanations, debates, critiques—stay human. Students learn to ask better questions of the AI and of themselves. Teachers use analytics to group learners strategically, not to label them. Workflows are transparent: when AI helped, how it helped, and where the student’s own reasoning begins. Parents can see and understand the data trail. Procurement follows evidence from targeted pilots, not glossy demos.

Friend or foe?

AI is neither. It is a force multiplier that amplifies whatever a school already is. In thoughtful hands, it widens access to timely feedback, personalises practice without isolating learners, and frees teachers to focus on the parts of teaching only people can do: care, judgement, and inspiration. In careless hands, it can flatten curiosity, outsource thinking and turn classrooms into data farms.

The choice is not ideological. It is architectural. Schools that set boundaries, teach with and about AI, and measure real gains will find a durable middle path. Those that skip the hard design work will discover that the very tool sold as a shortcut has made the long road to learning even longer.